Decoding the Web of Belief

“There are no facts, only interpretations.”

— Friedrich Nietzsche

Note: I've written this piece to be as non-technical as possible. For my non-technical readers, this piece should contain everything you need to understand the project from start to finish.

For those interested in the technical details, all of the code used to create the graph is located in this code repository. The README file contains instructions for sourcing the data, decompressing it, cleaning it, extracting entities from text using the REBEL model, leveraging your GPU with PyTorch, and constructing the graph.

With slight modification, the contents of the repository can be used to construct knowledge graphs from any collection of subreddits, or really any unstructured data source with a similar format.

Table of Contents

- Conception

- Mapping The Territory

- Where Theories Live

- Harvesting The Data

- Extracting Ideas

- Grounding Knowledge in Reality

- Cartography Meets Psychology

- Analysis of a Collective Consciousness

- Our Voyage Concludes

- Learn More

Conception

During a morning walk in mid-2024, I had a simple thought:

"If you could create a physical map of all conspiracy theories and how they are related, what would it look like?"

At the time, I had just finished research on building knowledge graphs from unstructured data. If it was possible to construct graphs from scientific publications, it seemed reasonable that similar analytical approaches could work for any unstructured source. Years prior, I attended a talk by Simon DeDeo at an event hosted by the Santa Fe Institute. He shared research findings on how humans construct explanations and how those explanations can go wrong. I’ve long been interested in extreme expressions of human behavior: historical tragedies, war, peak physical performance, and non-conformist belief systems. The idea of compiling a map of the most extreme explanations we’ve created about the world fascinated me.

So I set out to build a graph of conspiracy theories, unsure whether the results would be insightful or incoherent, but confident the process itself would be interesting. This project is technical in nature, spanning data engineering, natural language processing, large language models, the mechanics of knowledge graphs, named entity recognition, relation extraction and applied mathematics. I walk through the work end to end, from raw source data to the constructed graph, and then step back to reflect on what, if anything, the structure of the graph reveals.

Mapping The Territory

Before setting out on this journey, it’s important to be clear about the destination. I knew what I wanted to create from the beginning; I just wasn’t sure how well it would work.

I wanted to build a graph. Not a chart, the kind most of us remember from math class, but a graph. The kind of graph used in graph theory, sociology, biology, and other fields. This wasn’t new territory for me; I’d built graphs from unstructured data before in my professional work.

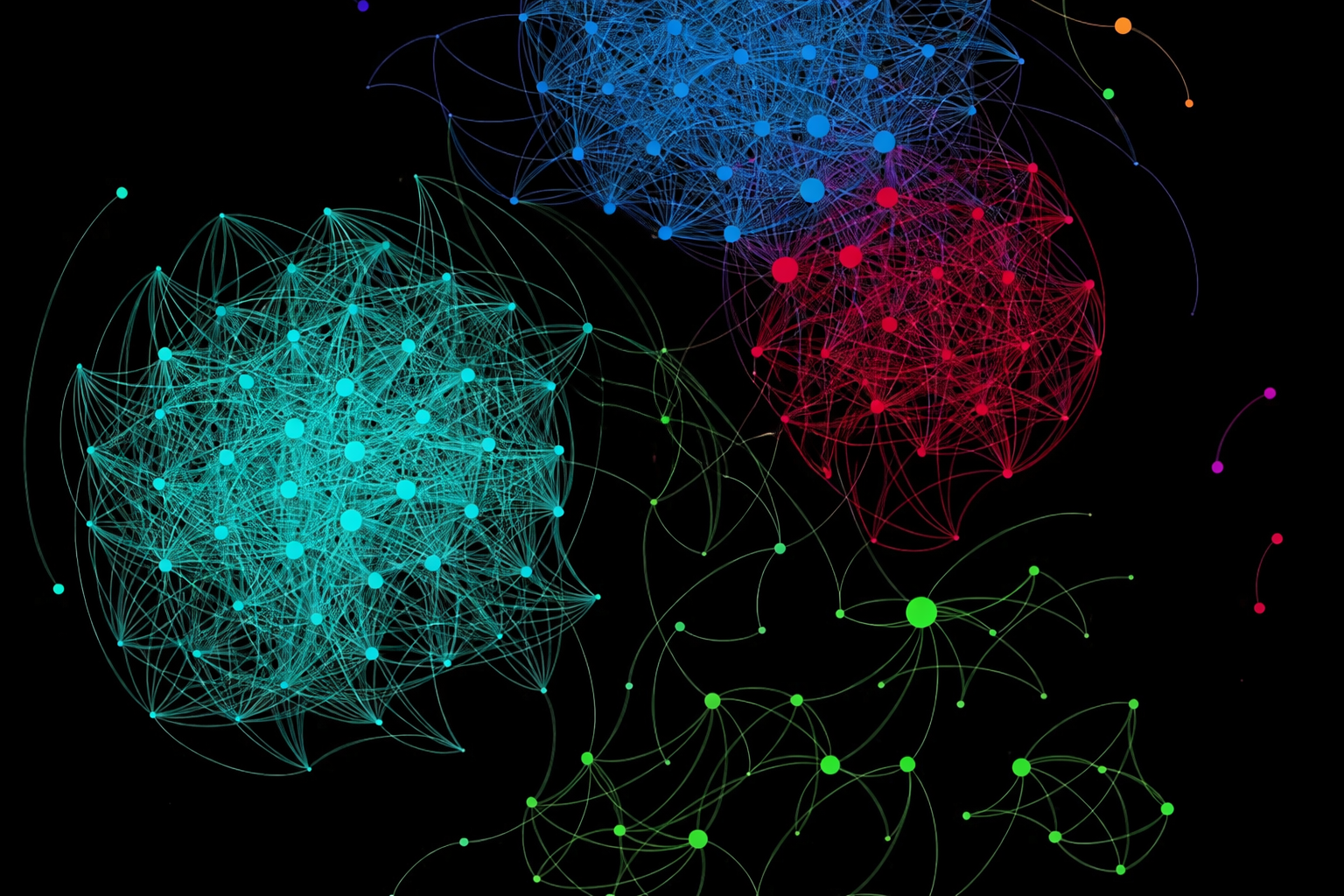

In discrete mathematics and graph theory, a graph is a structure consisting of a set of objects where some pairs of the objects are related. The objects are represented by circles called nodes, vertices or points. The relationships between the nodes are called edges or links.

This image is an example of the structure we are seeking to create, where the nodes are "ideas", the edges are the relationships between them, and the colors are "clusters" of ideas.

At its core, a graph is made up of nodes and edges. Nodes represent things, while edges represent the relationships between those things. In this case, I wanted the nodes to represent ideas, the building blocks of conspiracy theories. These might be things like JFK, the Illuminati, and so on. The edges would describe how those ideas relate to one another, with each relationship assigned a strength ranging from 0 for a very loose connection to 1 for a very strong one.

Finally, I wanted the graph to form clusters. Groups of closely related ideas would naturally cluster together and be color-coded accordingly. For example, a 9/11 cluster might include concepts like jet fuel, George W. Bush, world government, the CIA, and al-Qaeda.

The Internet Mapping Project was launched in 1997 by William Cheswick and Hal Burch at Bell Labs. Since 1998, it has collected and preserved traceroute-style paths to hundreds of thousands of networks on an almost daily basis.

A key part of the project was the visualization of this data, shown above as a graph. These Internet maps made the structure of the network visible in a way that raw data never could, and they were widely disseminated as a result.

A graph like this can tell us a lot. It can reveal which ideas are most closely related, based on the strength of the connections between them. It can show which ideas are the most central or influential, indicated by nodes with the greatest number of connections. And it can even surface distinct conspiracy theories themselves, emerging naturally as clusters within the graph.

A graph like the one shown above becomes our map. It’s the structure that helps us navigate the landscape of ideas, and it’s exactly what we’re trying to build.

Where Theories Live

Conspiracy theories are, at their core, ideas. To map them in the aggregate, those ideas must first be captured and combined in some concrete form. Many philosophers, including Plato, Thomas Hobbes, Hegel, and Wittgenstein, have emphasized the deep entanglement between thought, speech, language, and writing. If ideas are inseparable from the language that gives them shape, then collecting language becomes a way of collecting thought itself. As Wittgenstein put it in the Tractatus Logico-Philosophicus, “The limits of my language mean the limits of my world.”

If we accept the assumption that written text is strongly correlated with thought, then text becomes an ideal substrate for constructing a knowledge graph of conspiracy theories. The task, then, is to assemble a corpus that captures how these ideas are articulated, debated, and refined in the wild.

The School of Athens by Raphael. A Renaissance fresco depicting an idealized public square of reasoned debate. What would our ancestors make of the migration from physical spaces to virtual forums online? How would they respond to the fractured sense-making and competing explanations of the world that dominate our discourse today?

An effective corpus for this purpose should have three core properties. First, it must be tightly focused on conspiracy theories, with language bounded to the subject to ensure a high signal-to-noise ratio. Second, it should present discourse rather than monologue. It should contain presentation, discussion, and debate that mirror a public square in which claims are proposed, challenged, defended, and elaborated. Finally, the corpus must be both large and temporally broad. The greater the volume of text and the longer the time horizon it spans, the more robust and representative the resulting map becomes.

Reddit satisfies these requirements particularly well. The platform is organized into topic-specific communities called subreddits, each functioning as a loosely defined forum centered on a shared interest. Some subreddits focus on news, others on casual reflection or technical explanation, but all share a common structure: posts followed by threaded discussion. This structure allows discourse to unfold naturally over time, often across many years and millions of contributions.

For conspiracy theories specifically, I selected four subreddits:

- r/conspiracy

- r/conspiracytheories

- r/conspiracy_commons

- r/conspiracyII

Together, these communities offer sustained, focused discussion spanning more than a decade.

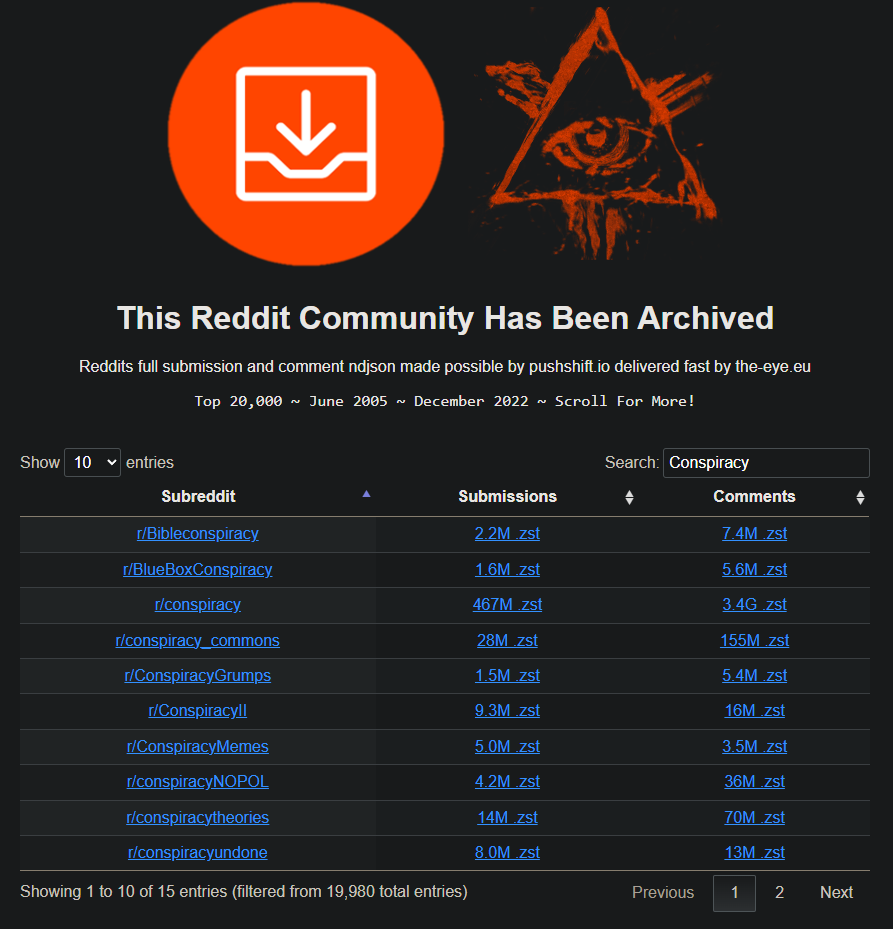

Using “The Eye”, a dataset containing posts and comments from the top 20,000 subreddits over nearly two decades, I extracted all available content from these four communities. This corpus, covering years of claims, arguments, and reinterpretations, serves as the raw material for constructing the conspiracy theory knowledge graph.

Harvesting The Data

With our ideal conspiracy theory map and data source defined, the next step is to actually acquire the data and prepare it for analysis. To do this, we navigate to the Reddit archive and manually download eight files: one file containing posts and another containing comments, for each of the four subreddits.

A screenshot from the archive.

These files are compressed, meaning their data structure has been altered to reduce file size. I wrote custom code (modified from the zreader utility, provided by the creators of the Reddit archive) to uncompress the files and extract only the data we care about.

Because the source files are extremely large, the code must process them as a stream rather than uncompressing them all at once. It works through each compressed Reddit file in chunks, decompressing a portion at a time, extracting the relevant values, and writing them to a new file. Attempting to uncompress an entire file at once would exceed available memory and cause the process to fail.
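For the technically curious, here is a minimal sketch of that streaming pattern. It is not the repository's code: it uses `zlib` from the Python standard library as a stand-in for the archive's actual compression format, and the function names, chunk size, and `POST_FIELDS` tuple are illustrative assumptions. What matters is the shape of the loop: read a chunk, decompress it, split out complete lines, and keep only the wanted fields.

```python
import json
import zlib

# Field names taken from the post list in this section.
POST_FIELDS = ("title", "selftext", "num_comments", "ups",
               "downs", "score", "created_utc", "permalink")


def iter_decompressed_chunks(fileobj, chunk_size=1 << 20):
    """Yield decompressed bytes one chunk at a time (zlib used as a stand-in)."""
    decompressor = zlib.decompressobj()
    while True:
        chunk = fileobj.read(chunk_size)
        if not chunk:
            break
        yield decompressor.decompress(chunk)
    yield decompressor.flush()


def iter_records(chunks):
    """Turn a stream of byte chunks into parsed JSON objects, one per line."""
    buffer = b""
    for chunk in chunks:
        buffer += chunk
        # Everything before the last newline is complete; the rest waits
        # for the next chunk, so a record split across chunks is never lost.
        *lines, buffer = buffer.split(b"\n")
        for line in lines:
            if line.strip():
                yield json.loads(line)
    if buffer.strip():
        yield json.loads(buffer)


def extract_fields(record, fields=POST_FIELDS):
    """Keep only the fields we care about; missing fields become None."""
    return {name: record.get(name) for name in fields}
```

Only one chunk and one partial line are held in memory at any moment, which is why this approach survives files far larger than the available RAM.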

For each post, the following values were extracted:

- title: The title of the post

- selftext: The text for the body of the post

- num_comments: The number of comments on the post

- ups: The number of upvotes

- downs: The number of downvotes

- score: The net score between up and downvotes (i.e. ups - downs)

- created_utc: The timestamp the post was created, denoted in UTC

- permalink: The Reddit URL to the post

For each comment, the following values were extracted:

- author: The author of the comment

- body: The comment text

- ups: The number of upvotes

- downs: The number of downvotes

- score: The net score between up and downvotes (i.e. ups - downs)

- created_utc: The timestamp the comment was created, denoted in UTC

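Here is an example of a single post as stored in one of the output files, one post per line. Note that the output uses friendlier key names than the raw Reddit fields listed above (for example, selftext is stored as body):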

{

"title": "What if BitCoin was created by the NSA for free computing power to decrypt anything ecnrypted with SHA-256?",

"body": "Or potentially some other kind of decryption capabilities?",

"number_of_comments": 4,

"upvotes": 11,

"downvotes": 0,

"score": 11,

"created": 1386335177,

"reddit_url": "https://www.reddit.com//r/conspiracytheories/comments/1s8n0r/what_if_bitcoin_was_created_by_the_nsa_for_free/"

}

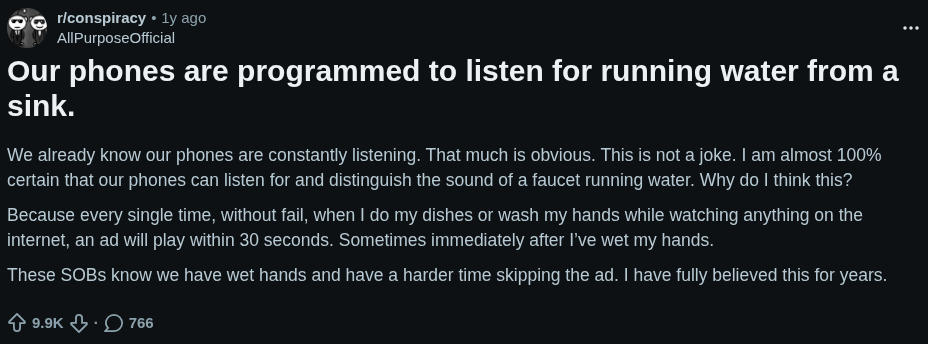

A post from r/Conspiracy on Reddit. The title is in bold, and the body describes in more detail the poster's thoughts and evidence about his phone listening to him. In the bottom left you can see the total net number of votes. Upvotes and downvotes are not individually shown. The 768 comments will be contained in the "comments" file for this subreddit.

Now, remember that our goal is to extract ideas from the text of these posts. That means there needs to be meaningful text present in the title, the body, or ideally both. After reviewing the data files, I identified three cases where a post or comment should be removed from our text corpus:

- Comments made by AutoModerator, a bot that performs automated actions and is not part of the conspiracy theory discourse.

- Posts where the title and body are too short to convey a meaningful idea—for example, a post with the title “CIA” and the body “They are watching.”

- Posts or comments that have been deleted but remain archived in the dataset. These appear as "[deleted]" and contain no usable content.

A second set of code iterates through the uncompressed data and removes any posts or comments that meet these criteria. Once this filtering step is complete, the remaining text is ready to be processed for extracting the “ideas” that will form our conspiracy graph.
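The three rules above can be sketched as two small predicates. This is an illustration rather than the repository's actual filter: the 40-character cutoff is a hypothetical value (the exact minimum length is not stated here), and the key names follow the example post shown earlier.

```python
MIN_TEXT_CHARS = 40  # hypothetical cutoff; the real value is not given in this piece


def _clean(text):
    """Normalize a possibly missing text field to a stripped string."""
    return (text or "").strip()


def keep_comment(comment):
    """Drop AutoModerator comments and deleted or empty comments."""
    if comment.get("author") == "AutoModerator":
        return False
    body = _clean(comment.get("body"))
    return bool(body) and body != "[deleted]"


def keep_post(post, min_chars=MIN_TEXT_CHARS):
    """Drop deleted posts and posts too short to convey an idea."""
    title = _clean(post.get("title"))
    body = _clean(post.get("body"))
    if "[deleted]" in (title, body):
        return False
    return len(title) + len(body) >= min_chars
```

With this cutoff, the post titled “CIA” with the body “They are watching.” has only 21 characters of text in total, so it is dropped.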

Extracting Ideas

The next task is to extract ideas and relationships from the text so they can serve as the building blocks of our conspiracy graph. In natural language processing, this breaks down into two core tasks:

- Named Entity Recognition

- Relation Extraction

While the terminology sounds technical, the concepts themselves are fairly intuitive.

Named Entity Recognition (NER) refers to identifying words or phrases in a sentence that belong to a specific category, such as people, places, or organizations. For example, imagine we want to identify people in the following sentence:

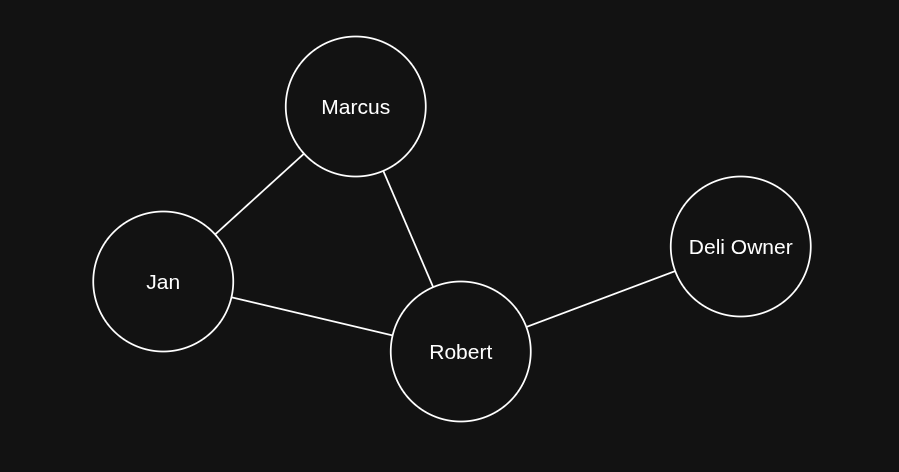

Jan and Marcus walked to the deli for lunch and were surprised to see their old coworker Robert talking to the deli owner there.

The named entities in this sentence are:

- "Jan"

- "Marcus"

- "Robert"

- "deli owner"

Relation extraction (RE) builds on this by identifying how those named entities are connected to one another. From the same sentence, we could extract the following relationships:

- "Jan", "and", "Marcus"

- "Jan", "old coworker", "Robert"

- "Marcus", "old coworker", "Robert"

- "Robert", "talking to", "deli owner"

Each of the items above is called a "triple". A single triple consists of a subject (or head), a predicate (or relation), and an object (or tail). An example from above is:

- Subject: "Robert"

- Predicate: "talking to"

- Object: "deli owner"
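In code, a triple is nothing more than a three-field record. The sketch below stores the four example triples and derives the nodes and edges a graph would need; the `Triple` class and `nodes_and_edges` function are illustrative names, not part of any library.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    head: str      # the subject
    relation: str  # the predicate
    tail: str      # the object


triples = [
    Triple("Jan", "and", "Marcus"),
    Triple("Jan", "old coworker", "Robert"),
    Triple("Marcus", "old coworker", "Robert"),
    Triple("Robert", "talking to", "deli owner"),
]


def nodes_and_edges(triples):
    """Nodes are the distinct heads and tails; each triple contributes one edge."""
    nodes = sorted({name for t in triples for name in (t.head, t.tail)})
    edges = [(t.head, t.tail, t.relation) for t in triples]
    return nodes, edges
```

Four triples yield four nodes and four labeled edges, which is all the information a drawing of the graph contains.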

If we used these triples to construct a graph, it would look something like this:

Named entity recognition and relation extraction are easy to grasp and fairly straightforward to perform by hand. The real challenge lies in doing them reliably, at scale, across millions of Reddit posts and within a realistic amount of time.

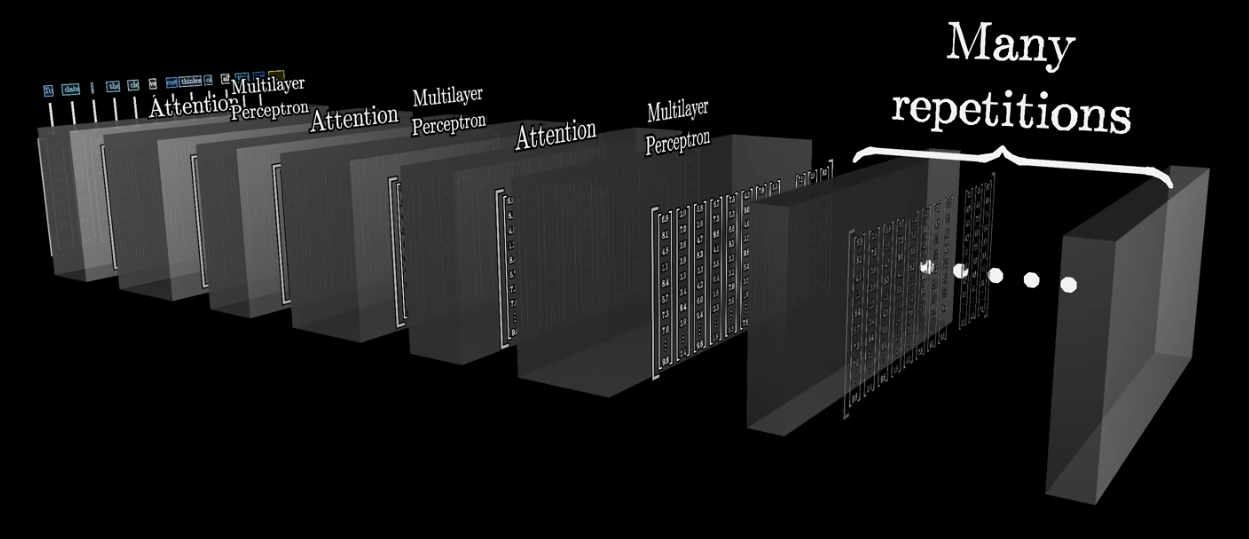

Thankfully, there is an open-source transformer model trained specifically for this task. Transformer models are a class of machine-learning models. They have the same underlying architecture used by ChatGPT, Claude, and other large language models. The term “transformer” refers to the mathematical framework that powers them, and it turns out to be especially effective at language-centric tasks.

The YouTuber 3Blue1Brown has a phenomenal playlist that walks you through the basics of machine learning, transformer models, attention, and the underlying mathematics. I've linked it at the bottom of this piece.

Researchers at Babelscape, the Italian tech company behind this model, trained a transformer to perform both named entity recognition and relation extraction. In simple terms, they fed the model large numbers of example sentences along with the correct entities and relationships, allowing it to learn how to extract them on its own, instead of doing it by hand.

The model isn’t perfect, and we’ll apply additional analytics and cleanup steps to refine its output. Still, it’s more than sufficient for extracting the ideas and relationships that underpin conspiracy theories.

The final constraint was time. I ran the model on an old gaming PC equipped with a powerful graphics processing unit (GPU). Modern machine-learning libraries can leverage GPUs to run transformer models far faster than a standard CPU, and the difference is dramatic. After writing the necessary code, downloading the model, and letting it churn through millions of Reddit posts and comments (over the course of nearly a year) I finally had the raw building blocks of the conspiracy graph.
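For readers curious about what the model actually hands back: REBEL is a text-to-text model, so its raw output is a string with marker tokens rather than a tidy list. The sketch below parses such a string into triples. It assumes the layout `<triplet> head <subj> tail <obj> relation` described in the model's published documentation; treat that layout and the function name as assumptions to verify against the model card, not as the repository's code.

```python
def parse_rebel_output(text):
    """Parse a REBEL-style linearized string into head/type/tail dictionaries."""
    triples = []
    head, tail, relation = [], [], []
    current = None  # the list that plain word tokens are appended to

    def flush():
        # Emit a triple only when all three parts have been collected.
        if head and tail and relation:
            triples.append({"head": " ".join(head),
                            "type": " ".join(relation),
                            "tail": " ".join(tail)})

    cleaned = text.replace("<s>", " ").replace("</s>", " ").replace("<pad>", " ")
    for token in cleaned.split():
        if token == "<triplet>":   # a new head begins
            flush()
            head, tail, relation = [], [], []
            current = head
        elif token == "<subj>":    # a new tail for the current head
            flush()
            tail, relation = [], []
            current = tail
        elif token == "<obj>":     # the relation follows
            relation = []
            current = relation
        elif current is not None:
            current.append(token)
    flush()
    return triples
```

The resulting dictionaries use the same `head`, `type`, and `tail` keys as the extracted triples shown in the next section.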

Grounding Knowledge in Reality

Now that we’ve extracted triples using the model, the next step is to evaluate their quality. Analytics are only as strong as the data behind them. If these triples don’t reflect reality, there’s little value in using them to build a knowledge graph.

Below are a few promising triples the model extracted directly from posts on the r/conspiracy subreddit:

- {"head": "Elon Musk", "type": "occupation", "tail": "agent of the system"}

- {"head": "London", "type": "capital of", "tail": "UK"}

- {"head": "Andrew Tate", "type": "employer", "tail": "CIA"}

- {"head": "WEF", "type": "field of work", "tail": "Human Enhancement"}

- {"head": "Klaus Schwab", "type": "employer", "tail": "BlackRock"}

Many of these results seem plausible for a conspiracy theory forum, but not all of the extracted triples hold up. Below are a few examples that are confusing, unclear, or nonsensical:

- {"head": "bots", "type": "subclass of", "tail": "shills"}

- {"head": "sponsored", "type": "subclass of", "tail": "paid"}

- {"head": "conspiracy", "type": "different from", "tail": "believing"}

- {"head": "senile", "type": "said to be the same as", "tail": "decrepit"}

- {"head": "2", "type": "followed by", "tail": "3"}

These triples are harder to interpret. While it’s possible to see how the model arrived at them, they are unlikely to meaningfully contribute to the knowledge graph. As a result, we need a way to filter out low-quality or ambiguous extractions.

One effective way to improve the reliability of machine learning outputs is to ground them in structured, human-curated knowledge. To do this, I chose to leverage Wikidata, a free, open, collaborative knowledge base of structured data about real-world entities that serves as the central data hub for Wikipedia and other Wikimedia projects.

We can take each extracted triple and attempt to match its subject and object to corresponding Wikidata entities. If both return a sufficiently close match, we replace the model-generated entities with their Wikidata counterparts. This approach serves multiple purposes.

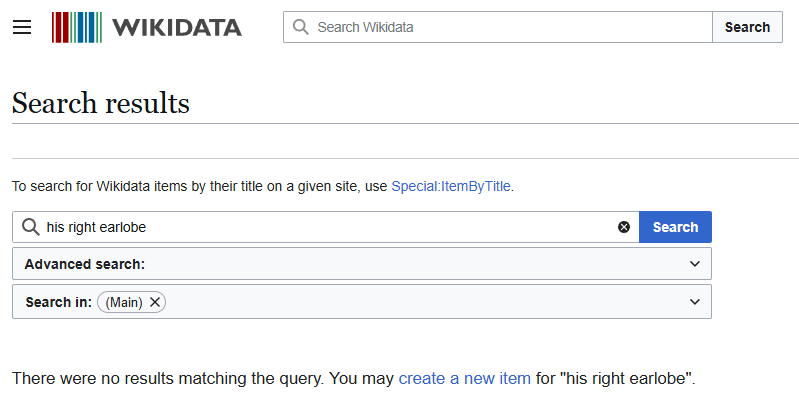

First, it helps remove incoherent or ambiguous entities produced by the model. For example, the model once extracted "his right earlobe" as a triple subject from a post on r/conspiracy discussing the "Single-bullet Theory" of the JFK assassination. This kind of entity isn’t meaningful for a knowledge graph, and since it cannot be linked to any concrete Wikidata entry, it is filtered out.

Second, it allows us to standardize entity names across triples. Different mentions like "CIA", "Central Intelligence Agency", or "The Agency" all refer to the same organization. By mapping them to the same canonical Wikidata entity, we ensure consistency and make the resulting knowledge graph much more reliable and easier to analyze.

Wikidata results for "his right earlobe". No results means it'll be dropped from the knowledge graph.

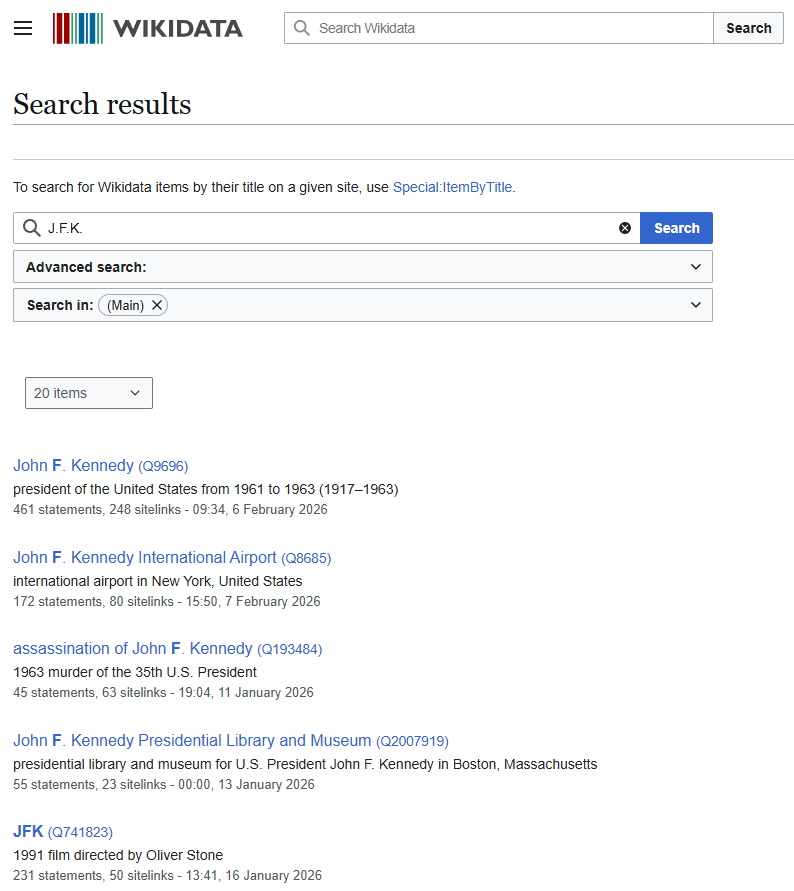

But what happens when a result is close, but not an exact match? For instance, the model frequently extracts “J.F.K.” How do we know it refers to the president rather than something else?

The challenge is compounded by ambiguity: “J.F.K.” could refer to the former president, an airport, a movie, an assassination, or countless other things. Our task is to reliably select the correct entity across millions of examples, which makes simple string matching insufficient.

Wikidata results for "J.F.K.".

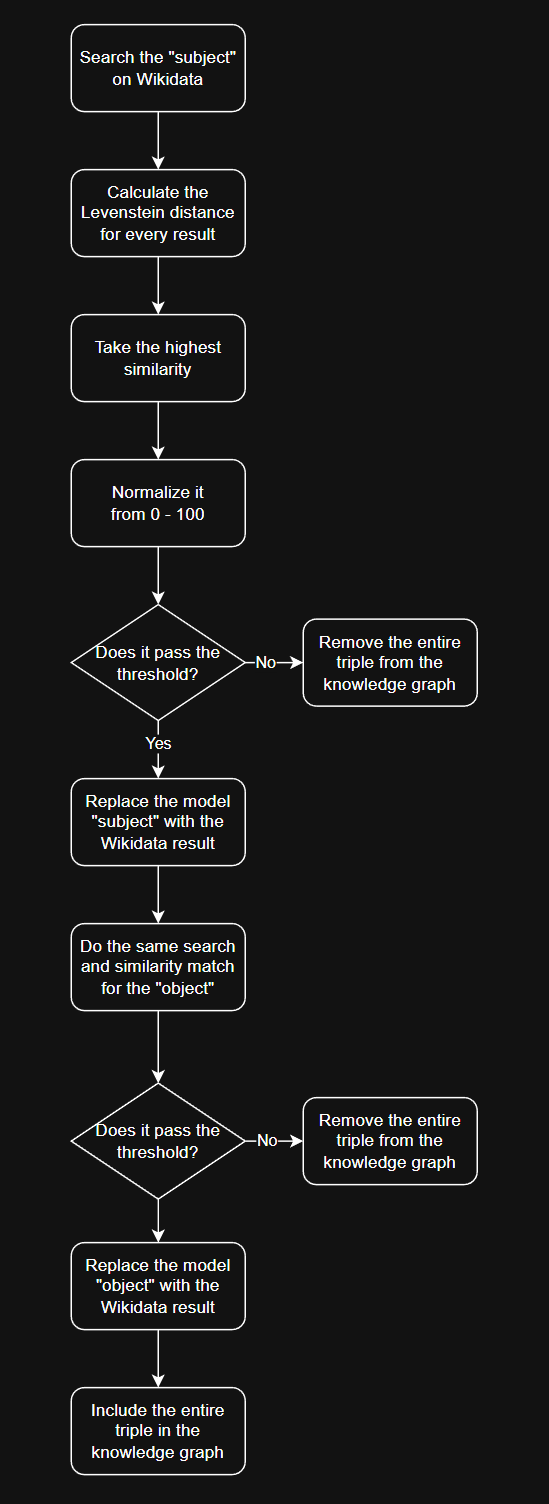

We can handle this problem with a simple process for searching Wikidata:

First, we search for the subject of a triple on Wikidata. We look through the results and pick the one that is most similar. If it’s a close enough match, we use the Wikidata entry; if not, we discard the triple. We repeat the same process for the object.

To do this at scale, we write code that automatically performs these searches across millions of triples. Because Wikidata doesn’t allow automated scraping, the code mimics a human using a web browser like Firefox, so it appears like normal browsing.

The code uses Levenshtein distance to measure how similar each result is to our original term. This method counts the minimum number of single-character edits needed to turn one string into another. The allowed edits are:

- inserting a character

- deleting a character

- replacing a character

To see how this works in practice, consider the words "cat" and "cut". To turn "cat" into "cut", we replace the letter a with u, which counts as a single edit. This gives a Levenshtein distance of 1.

Now take the words "book" and "back". Here, we need to replace the first o with a and the second o with c, resulting in two edits. The Levenshtein distance in this case is 2.

Intuitively, a distance of 0 means the strings are identical, a small distance indicates the strings are very similar, and a large distance shows they are quite different.

For each Wikidata search, the script calculates the Levenshtein distance between our term and every result, then picks the result that is most similar. The distance is converted to a similarity score on a simple scale from 0 to 100, where 0 means completely different and 100 means an exact match. If the best match scores above a set threshold, such as 70, we accept it as a valid match and move on to the next search.
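Here is a minimal sketch of the matching step, under a few stated assumptions. The distance function is the standard dynamic-programming form of Levenshtein distance. The conversion to a 0 to 100 score (dividing by the length of the longer string) and the case-insensitive comparison are my own illustrative choices; the actual script may scale the score differently. The candidate labels would come from a Wikidata search, which is not shown.

```python
def levenshtein(a, b):
    """Minimum number of single-character inserts, deletes, or replacements."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1,          # delete from a
                               current[j - 1] + 1,       # insert into a
                               previous[j - 1] + cost))  # replace or keep
        previous = current
    return previous[-1]


def similarity(a, b):
    """Scale distance to 0..100 (assumed formula: 100 * (1 - d / longest))."""
    longest = max(len(a), len(b))
    if longest == 0:
        return 100.0
    return 100.0 * (1 - levenshtein(a, b) / longest)


def best_match(term, candidates, threshold=70):
    """Return the most similar candidate label, or None if none clears the bar."""
    if not candidates:
        return None
    best = max(candidates, key=lambda c: similarity(term.lower(), c.lower()))
    if similarity(term.lower(), best.lower()) >= threshold:
        return best
    return None
```

A term with no search results, such as "his right earlobe", returns `None` and its triple is discarded, while `levenshtein("cat", "cut")` and `levenshtein("book", "back")` give the distances of 1 and 2 worked out above.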

The entire process described above, drawn as a process map.

With the above process finished, we now have the means to construct the final graph.

Cartography Meets Psychology

Graph construction begins by combining all entities and edges to render the network. While I will walk through a simplified example here, the actual process operates on a much larger scale.

The first step in the process is to remove self-referencing entities. For instance, if the node "Elon Musk" is connected to itself, that connection is discarded. Self-references can be meaningful in some graphs. For example, in a protein regulation network, a protein that regulates itself (known as autoregulation) should be preserved. In our graph, however, self-references are removed because they do not provide additional insight.

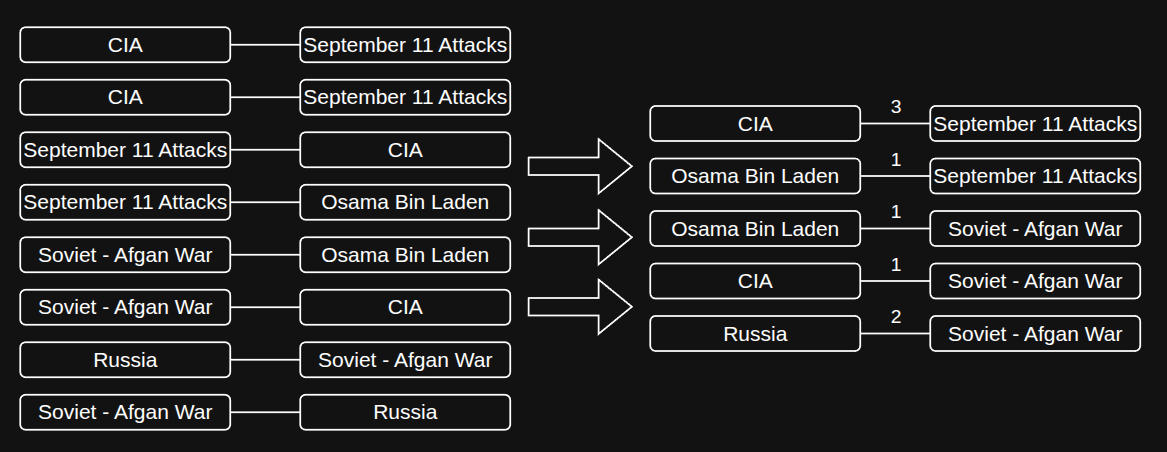

The second step is to define the “strength” of the relationship between entities. For example, if the entities "Russia" and "China" appear together in the graph, how closely are they related? To quantify this, we count all occurrences of each distinct node pair, treating the order of nodes as irrelevant (so "CIA" connected to "Osama Bin Laden" is counted the same as "Osama Bin Laden" connected to "CIA"). This yields a raw numerical count that represents the relationship’s strength.
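The first two steps fit in a few lines. This is a sketch with an illustrative function name: each pair is sorted before counting so that the order of the two nodes does not matter, and self-referencing pairs are skipped.

```python
from collections import Counter


def count_pairs(pairs):
    """Count occurrences of each unordered node pair, skipping self-references."""
    counts = Counter()
    for a, b in pairs:
        if a == b:  # step one: drop self-referencing entities
            continue
        counts[tuple(sorted((a, b)))] += 1  # step two: order-independent key
    return counts
```

Given the pairs ("CIA", "Osama Bin Laden") and ("Osama Bin Laden", "CIA"), both land on the same key, so that edge receives a raw strength of 2.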

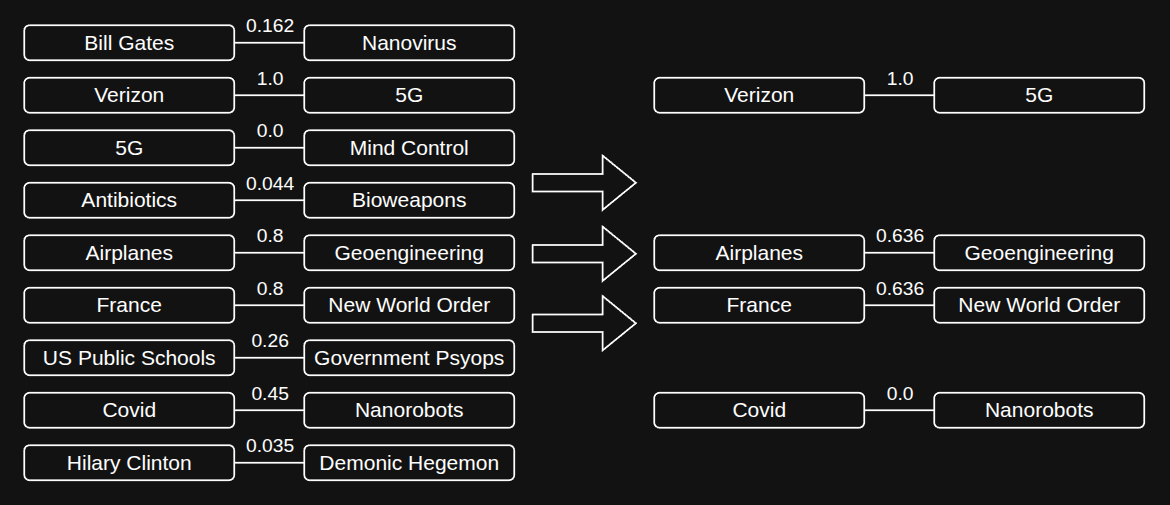

Visualization of the second step in the graph creation process, where raw edge strengths are calculated.

It is important to remember that each extracted node pair reflects a co-occurrence in a sentence or paragraph. The underlying assumption is that the more frequently two concepts are mentioned together, the stronger their conceptual link. This relationship is captured mathematically as an integer representing the connection’s weight.

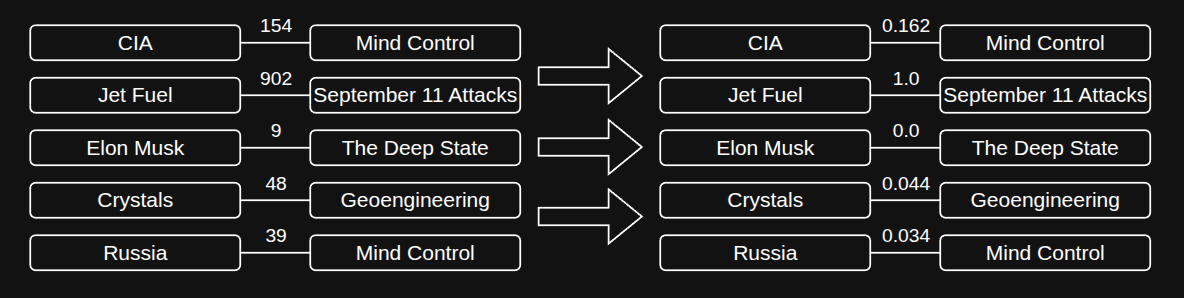

The third step is to normalize the edge strengths. Raw values like 40, 592, or 5,000,020 are difficult to interpret on their own, so we convert them to a scale between 0 and 1 using min-max normalization. This method rescales all values based on the minimum and maximum in the dataset, so the smallest value becomes 0, the largest becomes 1, and everything else is proportionally adjusted in between. This transformation makes the data more comparable and easier to reason about across the entire network.
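Min-max normalization is a one-line formula: subtract the minimum, then divide by the range. A sketch follows, with an illustrative function name and an assumed convention for the edge case where every value is identical.

```python
def min_max_normalize(weights):
    """Rescale a {pair: raw_count} mapping so values fall between 0 and 1."""
    if not weights:
        return {}
    low, high = min(weights.values()), max(weights.values())
    if high == low:
        # Assumed convention: if all counts are equal, treat each as full strength.
        return {pair: 1.0 for pair in weights}
    return {pair: (value - low) / (high - low) for pair, value in weights.items()}
```

Applied to the raw values 40, 592, and 5,000,020, the smallest becomes 0, the largest becomes 1, and 592 lands at (592 - 40) / (5,000,020 - 40).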

Visualization of the third step in the graph creation process. Normalized values are shown on the right, where the smallest value in the set is 0, and the largest value is 1.

Normalization gives us an intuitive sense of connection strength. For example, a normalized value of 0.92 clearly indicates a strong relationship, while 0.03 indicates a weak one, whereas raw counts like 205 or 5,000,020 are much harder to contextualize. Since our knowledge graph incorporates millions of posts, comments, and extracted entities, this step is crucial for making the network readable and for highlighting which relationships are truly significant.

The fourth step is to prune the graph by applying a “cutoff” threshold that removes the bottom X percent of relationships based on their normalized strength. This focuses the analysis on the most meaningful connections.

The first reason for pruning is to eliminate low-strength relationships, which are essentially noise. These weak connections contribute little to understanding the network’s structure and can obscure the more significant relationships. The second reason is computational efficiency. Without pruning, the graph contains over 260,000 nodes and more than 1.4 million edges, requiring substantial memory and processing power. By removing weaker edges, we reduce these demands, making downstream analysis feasible on standard hardware.

Visualization of the fourth step using a threshold of 0.40. All pairs with a strength below this are dropped, and the remaining node pairs on the right are renormalized.

After applying the cutoff threshold, we remove the low-strength nodes and edges and then renormalize the remaining graph. For example, if the threshold is set at 0.4, all pairs with a strength below 0.4 are removed, and the remaining edge strengths are rescaled so that 0.4 now maps to 0, just like in the earlier normalization step.
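Pruning and renormalizing can be sketched as follows. One assumption to flag: the renormalization here is a fresh min-max pass over the surviving edges, so the weakest survivor maps to 0. That matches the example above when an edge sits exactly at the threshold, but the repository may define the rescaling slightly differently. The function name is illustrative.

```python
def prune_and_renormalize(strengths, threshold):
    """Drop edges below the threshold, then min-max rescale the survivors."""
    kept = {pair: s for pair, s in strengths.items() if s >= threshold}
    if not kept:
        return {}
    low, high = min(kept.values()), max(kept.values())
    if high == low:
        # Assumed convention: identical survivors are all treated as full strength.
        return {pair: 1.0 for pair in kept}
    return {pair: (s - low) / (high - low) for pair, s in kept.items()}
```

With strengths of 0.1, 0.4, 0.7, and 1.0 and a threshold of 0.4, the first edge is removed and the rest become 0, 0.5, and 1.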

In practice, I generated two versions of the graph: a small graph and a large graph. The small graph uses a high threshold above 90%, keeping only the top 10% of connections. This makes it ideal for focused analysis of the strongest relationships. The large graph, on the other hand, only removes the bottom 10%, retaining most of the relationships. While it is less practical for detailed analysis, it’s great for exploring the overall structure of the network and creating visually interesting representations.

To be continued...

Analysis of a Collective Consciousness

To be continued...

Our Voyage Concludes

To be continued...

Learn More

- Dietrich Deep Dives: The Psychology of Conspiracy Theories

- Mindscape 150 | Simon DeDeo

- Pushshift Reddit Archives

- Pushshift Github

- REBEL: Relation Extraction by End-to-End Language Generation

- 3Blue1Brown: Machine Learning

- Jay Alammar: The Illustrated Transformer

- Gephi - Introduction to Network Analysis and Visualization